I am a PhD student under the supervision of Dr. Yanye Lu at Peking University. I also collaborate closely with Dr. Guoqi Li and Dr. Man Yao from the Institute of Automation at the Chinese Academy of Sciences. My research interests primarily include unified models, computational imaging, and brain-inspired deep learning.

My recent work focuses on discovering potential inductive biases in unified models and on using these biases to improve how such models are pre-trained and trained.

🔥 News

- 2025.06: 🎉🎉 Two first-author papers are accepted by the International Conference on Computer Vision (ICCV 2025).

- 2025.02: 🎉🎉 Two papers are accepted by the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2025).

- 2025.01: 🎉🎉 One first-author paper is accepted by the International Conference on Learning Representations (ICLR 2025).

- 2024.12: 🎉🎉 One co-first-author paper is accepted as an oral by the AAAI Conference on Artificial Intelligence (AAAI 2025).

- 2024.04: 🎉🎉 One first-author paper is accepted as a spotlight by the International Conference on Machine Learning (ICML 2024).

- 2023.09: 🎉🎉 One co-first-author paper is accepted as a poster by the Conference on Neural Information Processing Systems (NeurIPS 2023).

- 2023.06: 🎉🎉 One co-first-author paper is accepted as a poster by the International Conference on Computer Vision (ICCV 2023).

📝 Publications

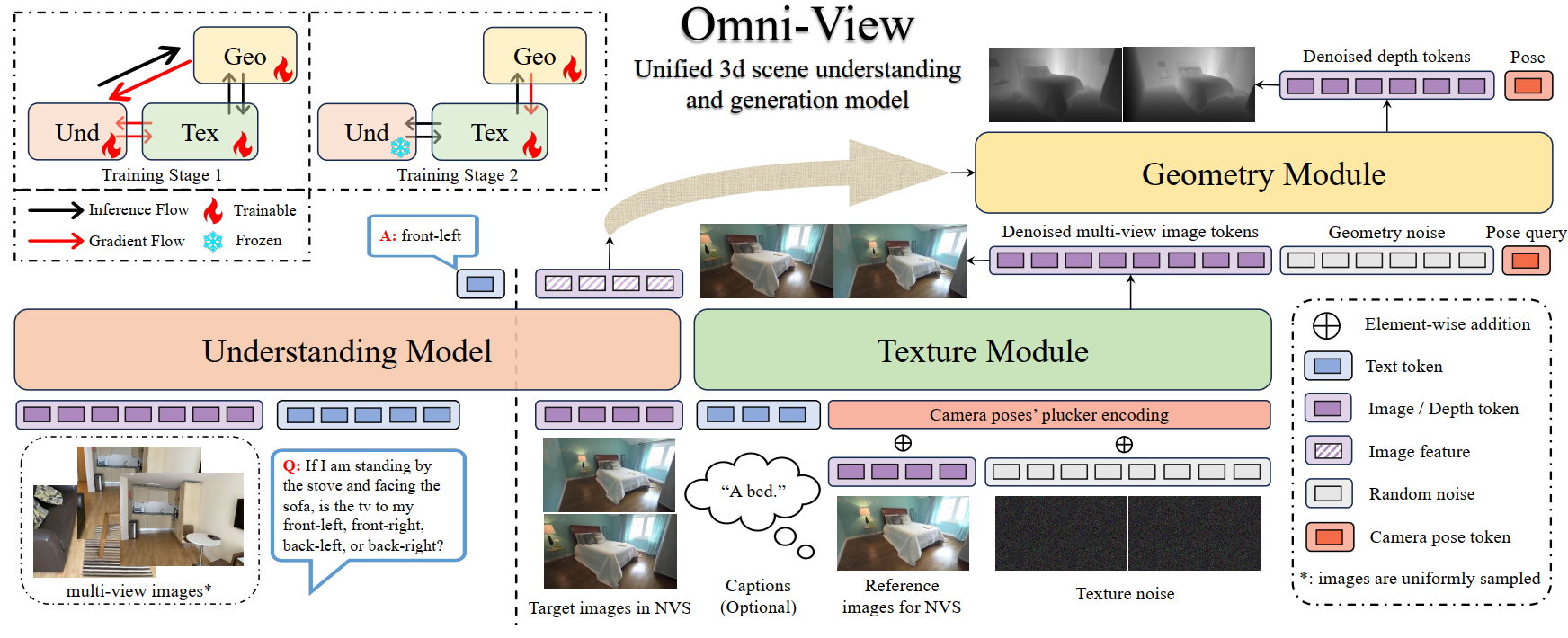

Omni-View: Unlocking How Generation Facilitates Understanding in Unified 3D Model based on Multiview images

JiaKui Hu, Shanshan Zhao♯, Qing-Guo Chen, Xuerui Qiu, Jialun Liu, Zhao Xu, Weihua Luo, Kaifu Zhang, Yanye Lu♯

arXiv 2025 | Paper | Code | Website

This paper presents Omni-View, which extends unified multimodal understanding and generation to 3D scenes based on multiview images, exploring the principle that “generation facilitates understanding”.

Building upon Bagel, Omni-View consists of an understanding model and a generation model. The generation model is further composed of two specialized modules: one for texture and one for geometry. Trained via a two-stage process, Omni-View shows high effectiveness in scene understanding and novel view synthesis. Crucially, it unlocks the benefits of its generative capabilities to enhance the model’s understanding performance.

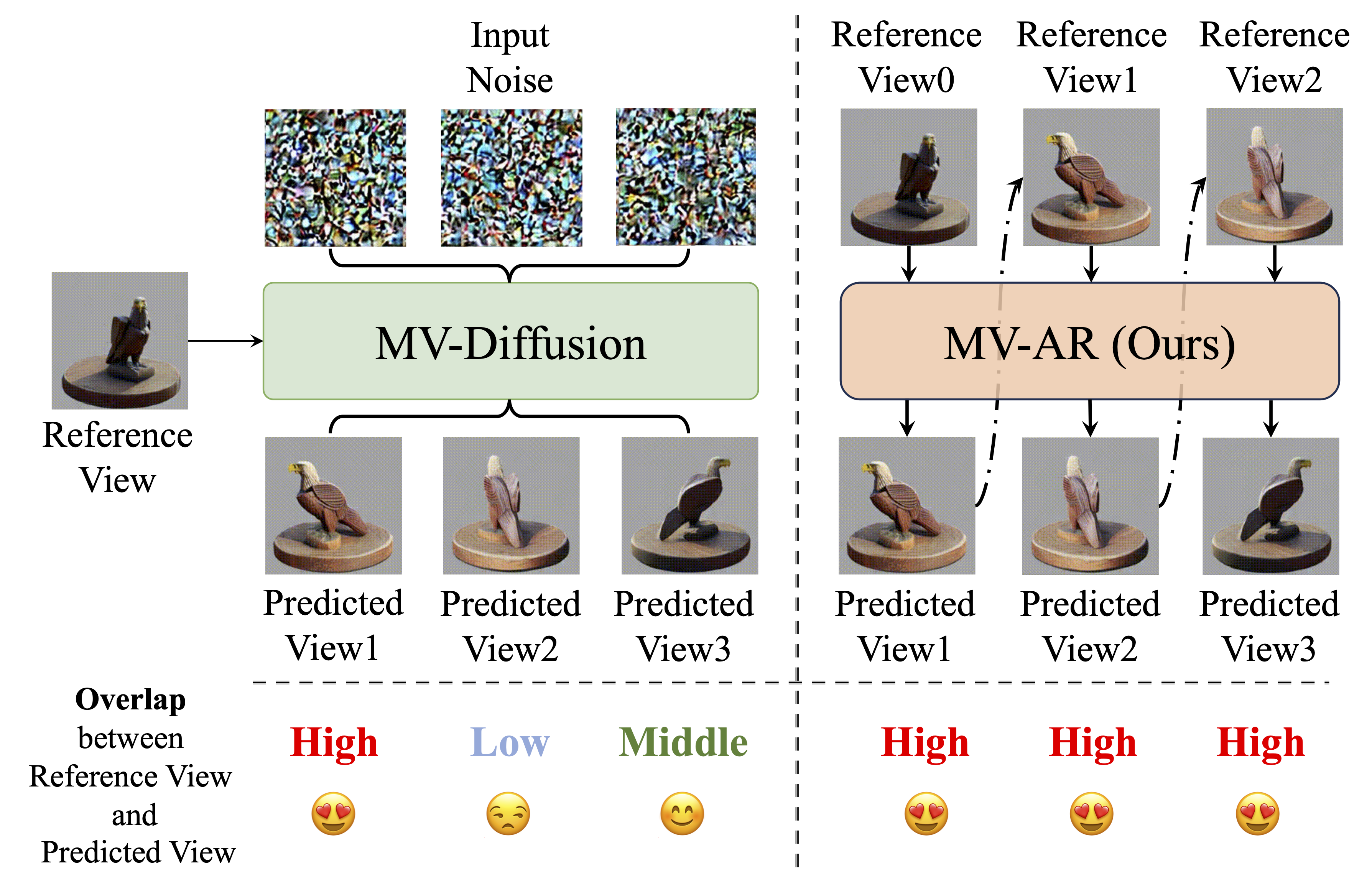

Auto-Regressively Generating Multi-View Consistent Images

JiaKui Hu$^*$, Yuxiao Yang$^*$, Jialun Liu♯, Jinbo Wu, Chen Zhao, Yanye Lu♯

ICCV 2025 | Paper | Code | blog (Chinese)

In this paper, we present the first autoregressive multi-view image generation model. Its motivation is as follows:

Diffusion-based multi-view image generation methods use a specific reference view for predicting subsequent views, which becomes problematic when overlap between the reference view and the predicted view is minimal, affecting image quality and multi-view consistency. Our MV-AR addresses this by using the preceding view with significant overlap for conditioning.

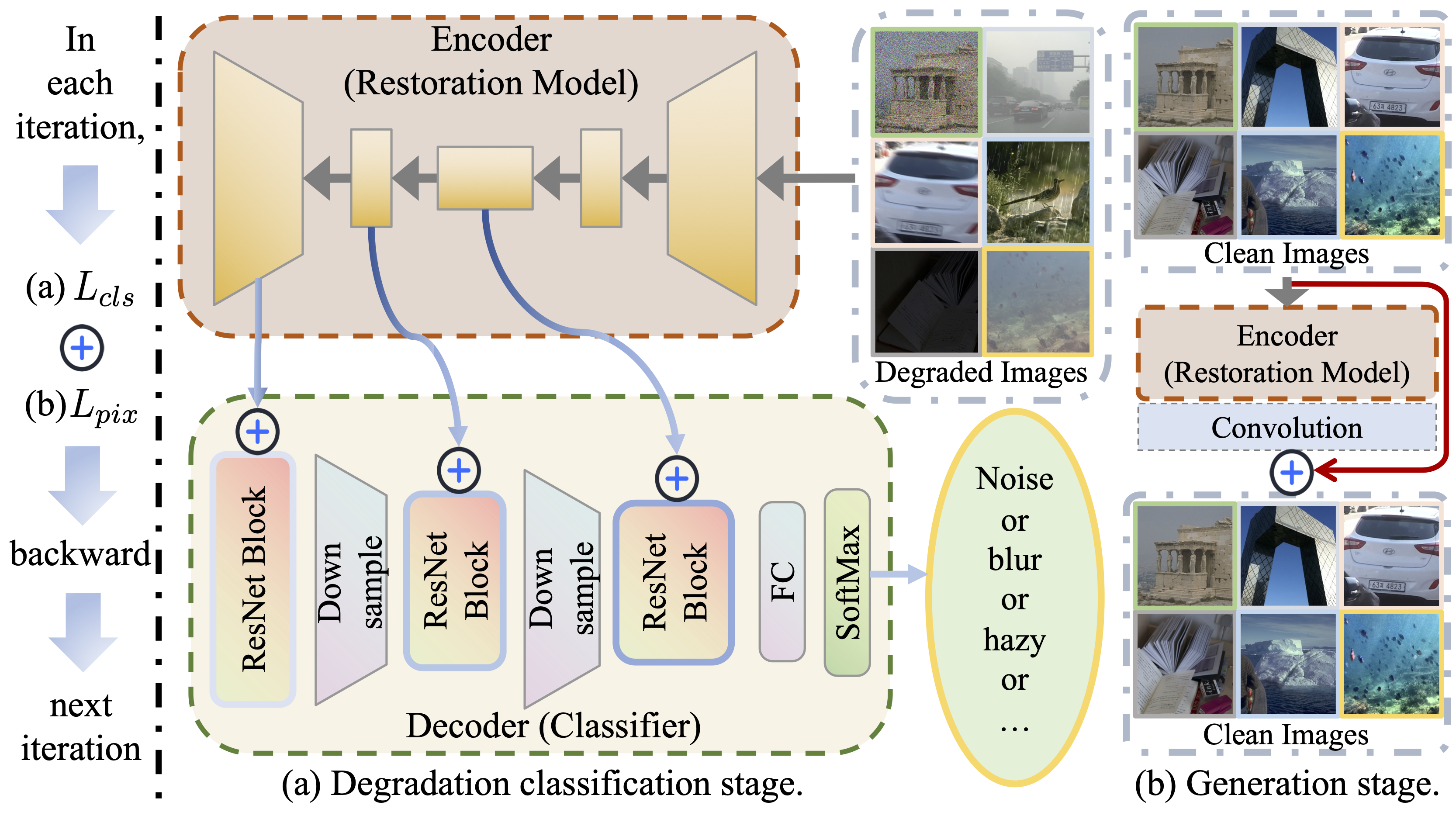

Universal Image Restoration Pre-training via Degradation Classification

JiaKui Hu, Lujia Jin, Zhengjian Yao, Yanye Lu♯

ICLR 2025 | Paper | Code | blog (Chinese)

In this paper, we report three interesting findings:

- Randomly initialized models demonstrate an inherent capability to classify degradation.

- Models trained on the all-in-one task exhibit the ability to discern unknown degradation.

- There is a degradation understanding step in the early training of the restoration model.

Based on these findings, a restoration model should attain sufficient degradation classification capability before training in order to ensure superior restoration performance.

🎖 Honors and Awards

- 2021 National Scholarship, China, Xidian University.

- 2020 National Scholarship, China, Xidian University.

📖 Education

- 2023.09 - present, PhD student, Peking University.

- 2019.09 - 2023.06, Undergraduate, Xidian University.

📫 Academic Services

Reviewer

Conferences:

- IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2024, 2025, 2026

- Conference on Neural Information Processing Systems (NeurIPS) 2024, 2025

- International Conference on Learning Representations (ICLR) 2025, 2026

- International Conference on Machine Learning (ICML) 2025

Journals:

TNNLS, TCSVT, NN

💻 Experience

- 2025.10 - present, Supernova Internship, at TeleAI, China.

- 2025.04 - 2025.10, Internship, at AIDC, China.

- 2024.10 - 2025.03, Internship, at Baidu Vis, China.

- 2023.09 - present, PhD student, at Peking University, China.

- 2021.09 - 2022.01, Internship, at OneFlow, China.